Amazon recently launched its AI chatbot, Amazon Q, which drew interest from companies but also raised concerns among employees over serious accuracy and privacy issues. Q was reported to suffer from significant "hallucinations" and to have disclosed confidential data, raising questions about the security of company information. Here are the details reported by Platformer.

Security alert for Amazon Q: confidential data and incorrect answers

Amazon introduced its AI chatbot, called Q, three days ago, but not everything seems to be going as planned. Some employees have raised the alarm over accuracy and privacy issues: Amazon Q reportedly produced "hallucinations" and revealed confidential information, such as the locations of AWS data centers, internal discount programs, and features not yet released.

This information comes from leaked documents obtained by Platformer, which describe the problem as a Level 2 security incident: a serious situation that requires urgent intervention by engineers.

Amazon, however, has downplayed the importance of these internal discussions. A spokesperson said that sharing feedback through internal channels is standard practice at Amazon and that no security issues have been identified as a result of this feedback. The company stressed that it values the feedback received and intends to keep refining Amazon Q as it transitions from preview to general availability.

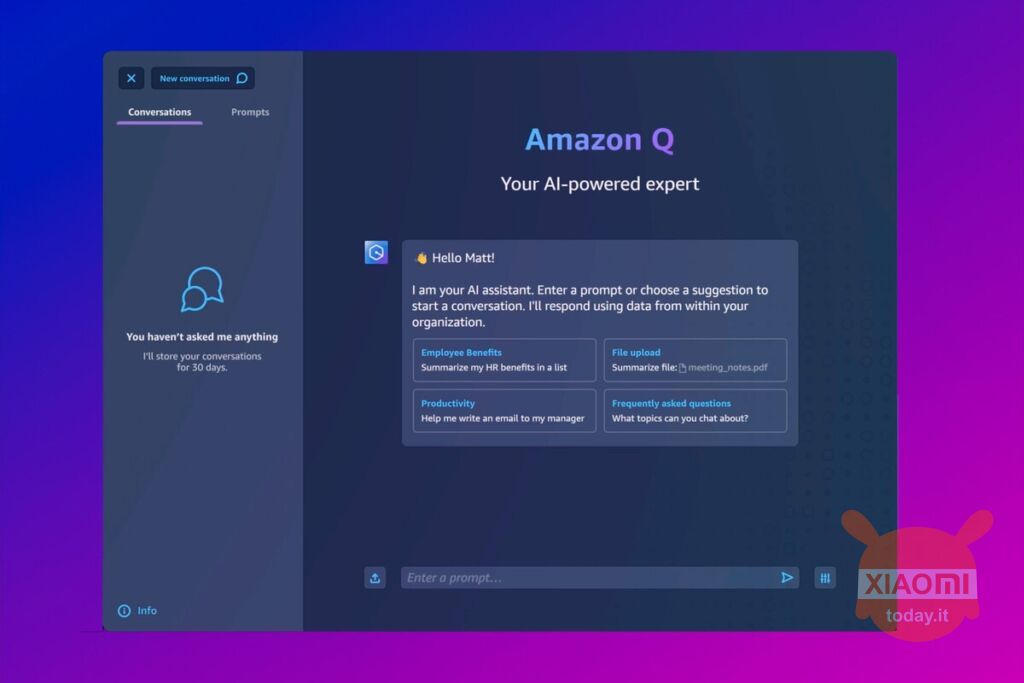

Amazon Q, currently available as a free preview, was presented as an enterprise-grade chatbot, with initial features aimed at answering developers' questions about AWS, editing source code, and citing sources. Amazon has emphasized Q's security and privacy, contrasting it with consumer-grade tools like ChatGPT.

However, an internal document revealed that Q can "hallucinate" and return harmful or inappropriate responses, such as outdated security information that could put customer accounts at risk. These risks are typical of large language models, all of which produce incorrect or inappropriate responses at least occasionally.

Via | Slashdot